Bandwidth Allocation in Datacenter Networks

Web service providers like Google and Facebook have built large scale datacenters to host many computationally intensive applications, ranging from PageRank to machine learning. In order to efficiently proceed a large volume of data, these applications typically embrace data parallel frameworks, such as MapReduce. In general, data parallel applications proceed in several computation stages that require communication between them. During the communication stage, link bandwidth is heavily demanded by constituent flows to transfer the intermediate data across the datacenter network. With MapReduce as an example, input data is first partitioned into a set of splits, so that they can be processed in parallel with map computation tasks. The map tasks produce intermediate results, which are then shuffled over the datacenter network to be processed by reduce computation tasks.

It is commonly accepted that bandwidth should be shared in a fair manner. However, traditional wisdom on fair bandwidth sharing is not applicable to the context of private datacenters shared by multiple data parallel applications, for the following two reasons. First, traditional fairness has largely focused on datacenters in a public cloud, which regulates that bandwidth on a link should be allocated fairly across different flows, pairs of virtual machines (VMs), or tenants according to their payments. Such payment proportionality is not applicable to private datacenters. Second, traditional mechanisms do not account for the characteristic of data parallel applications: the transfer time of an application is determined by the completion time of the slowest flow. Lacking awareness of such application characteristic may result in bandwidth wastage, since the amount of bandwidth allocated to a faster flow does not make any improvement of the application performance.

To fill this gap, we propose the notion of performance-centric fairness that is specifically customized for bandwidth allocation among data parallel applications in private datacenters. The guiding principle is that the application performance, defined as the reciprocal of the transfer time, should be proportional to their weights. To put it simply, applications with equal weights sharing the same private datacenter should enjoy the same performance.

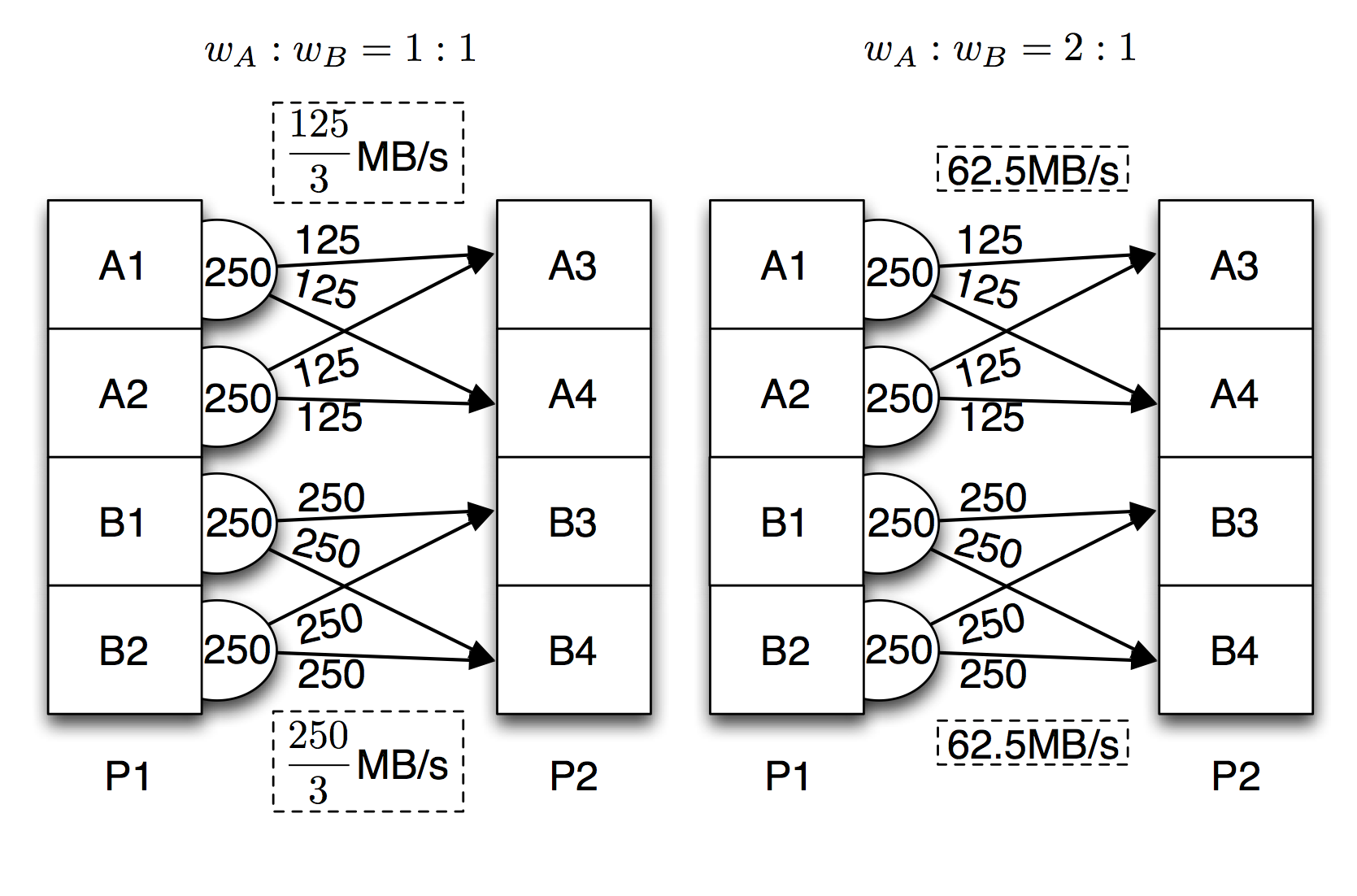

The figure below presents two illustrative examples of weighted performance-centric allocation. A is a MapReduce task with the shuffle communication pattern, the data produced by each map task, A1 and A2, is partitioned into two equal sets, each with a size of 125 MB, to be sent to both A3 and A4. In contrast, B uses the broadcast communication pattern, where each task in the first stage, B1 and B2, broadcasts all of its produced data, with a size of 250 MB, to both tasks in the second stage, B3 and B4. A1, A2 are collocated with B1, B2 on the same physical machine P1, sharing the egress link bandwidth of 500 MB/s. Similarly, A3, A4 and B3, B4 share the ingress link bandwidth (500 MB/s) of P2. According to their importance, A and B are assigned weights of wA and wB, respectively. For case (1), each flow of A is allocated 125/3 MB/s, and each flow of B is allocated 250/3 MB/s, so that A and B with the same weight can achieve the same transfer time. For case (2), all flows are allocated 62.5 MB/s, so that the transfer time of A is half that of B, which indicates that the performance of A is twice the performance of B, corresponding to their weight proportionality 2:1.

Figure: Examples of bandwidth allocation achieving weighted performance-centric fairness in two cases: (1) both applications have the same weight; (2) the applications have different weights.

In a general scenario, we investigated the problem of maximizing application performance while maintaining strict performance-centric fairness and present the inherent tradeoff between resource utilization and fairness. We then formulated the link bandwidth allocation problem with the objective of maximizing social welfare across all applications, so that resource utilization can be manipulated and improved by allowing a tunable degree of relaxation on performance-centric fairness. Based on dual based decomposition, we designed a distributed algorithm to solve this problem. Authored by Li Chen, this work has been published in the Proceedings of IEEE INFOCOM 2014.