Multi-Resource Scheduling

Queueing algorithms determine the order in which packets in various independent flows are processed, and serve as a fundamental mechanism for allocating resources in a network appliance. Traditional queueing algorithms make scheduling decisions in network switches that simply forward packets to their next hops, and link bandwidth is the only resource being allocated.

In modern network appliances, e.g., middleboxes, link bandwidth is no longer the only resource shared by flows. In addition to packet forwarding, middleboxes perform a variety of critical network functions that require deep packet inspection based on the payload of packets, such as IP security encryption, WAN optimization, and intrusion detection. Performing these complex network functions requires the support of multiple types of resources, and may bottleneck on either CPU or link bandwidth. For example, flows that require basic forwarding may congest the link bandwidth, while those that require IP security encryption need more CPU processing time. A queueing algorithm specifically designed for multiple resources is therefore needed for sharing these resources fairly and efficiently.

Multi-Resource Fair Queueing

Fairness offers predictable service isolation among flows. It ensures that the service (i.e., throughput) a flow receives is at least at the level when every resource is evenly allocated, independent of the behavior of rogue flows. The notion of Dominant Resource Fairness (DRF) embodies this isolation property, with which each flow receives approximately the same processing time on its dominant resource, defined as the one that requires the most packet processing time.

To implement DRF for packet processing, we have designed new multi-resource fair queueing schemes that schedule packets in O(1) time with bounded scheduling delay. These works have been published in IEEE ICNP 2013 and IEEE INFOCOM 2014.

Fairness-Efficiency Tradeoff

While fairness is a desirable property to pursue, efficiency serves as another important metric measuring the resource utilization achieved by a queueing algorithm. High resource utilization naturally translates into high traffic throughput. This is of particular importance to enterprise networks, given the surging volume of traffic passing through middleboxes.

Both fairness and efficiency can be achieved at the same time in traditional single-resource fair queueing, where bandwidth is the only concern. As long as the schedule is work conserving, bandwidth utilization is 100%. That leaves fairness as an independent objective to optimize.

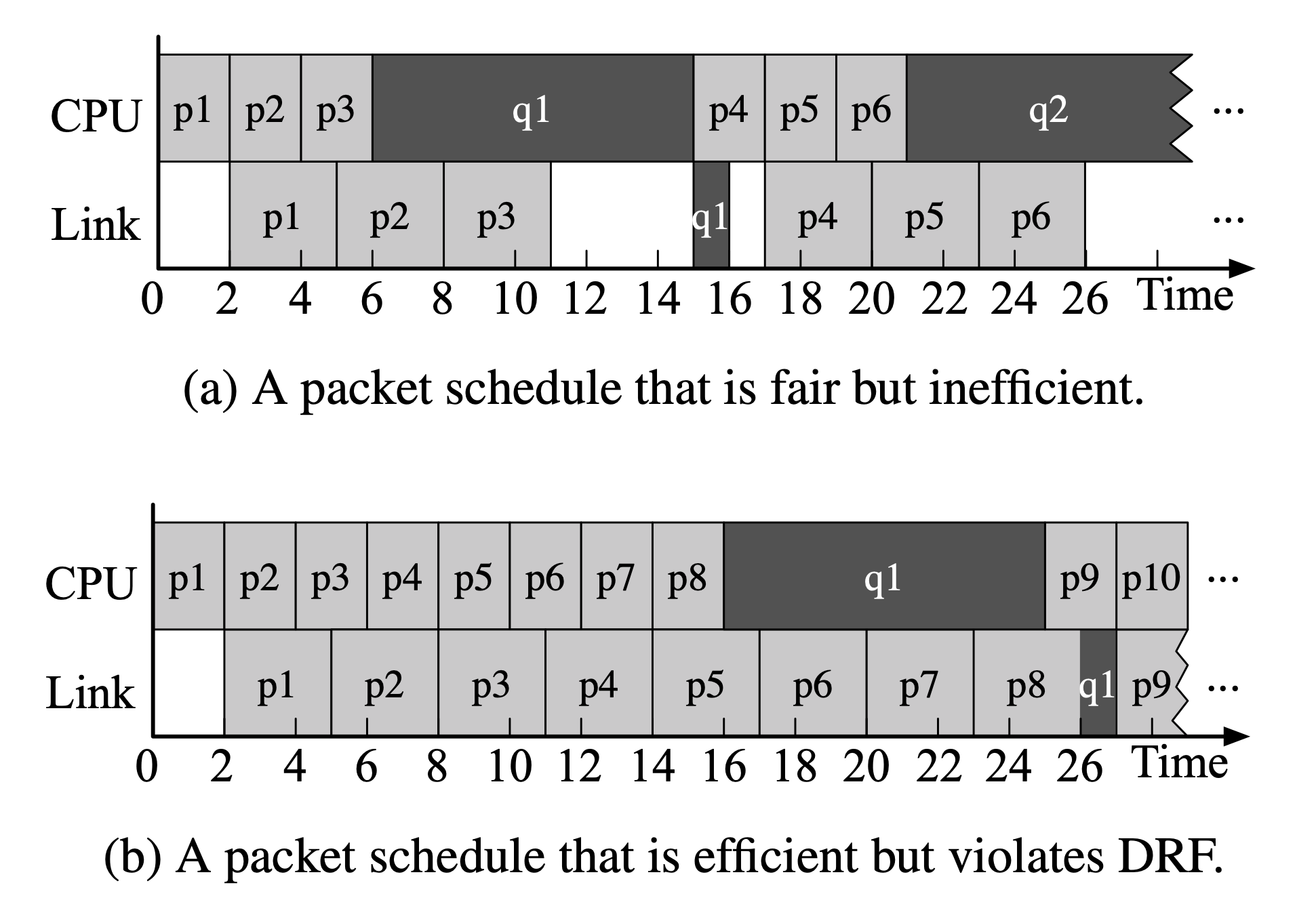

However, in the presence of multiple resources, fairness is often a conflicting objective against efficiency. To see this, consider two schedules below with two flows whose packets need CPU processing before transmission. Each packet in Flow 1 has a processing time vector ⟨2, 3⟩, meaning that it requires 2 time units for CPU processing and 3 time units for transmission; each packet in Flow 2 has a processing time vector ⟨9, 1⟩. The dominant resource of Flow 1 is link bandwidth, as it takes more time to transmit a packet than processing it using CPU; similarly, the dominant resource of Flow 2 is CPU. To achieve DRF, the transmission time Flow 1 receives should be approximately equal to the CPU processing time Flow 2 receives. In this sense, Flow 1 should schedule three packets whenever Flow 2 schedules one, so that each flow receives 9 time units to process its dominant resource, as shown in Fig. (a). This schedule, though fair, leads to poor bandwidth utilization—the link is idle for 1/3 of the time. On the other hand, Fig. (b) shows a schedule that achieves 100% CPU and bandwidth utilization by serving eight packets of Flow 1 and one packet of Flow 2 alternately. The schedule, though efficient, violates DRF. While Flow 1 receives 24/25 of the link bandwidth, Flow 2 receives only 9/25 of the CPU time.

Figure: An example showing the tradeoff between fairness and efficiency for multi-resource packet scheduling. Flow 1 sends packets p1, p2, $\ldots,$ each having a processing time vector ⟨2, 3⟩; Flow 2 sends packets q1, q2, $\ldots,$ each having a processing time vector ⟨9, 1⟩. Schedule (a) achieves DRF but is inefficient; Schedule (b) is efficient but unfair.

The fairness-efficiency tradeoff shown in the example above generally exists for multi-resource packet scheduling, but it has received little attention before. Existing multi-resource queueing algorithms focus solely on fairness. However, for applications having a loose fairness requirement, trading off some fairness for higher efficiency and higher throughput is well justified. In general, depending on the underlying applications, a network operator may weigh fairness and efficiency differently. Ideally, a multi-resource queueing algorithm should allow network operators to flexibly specify their tradeoff preference and implement the specified tradeoff by determining the “right” packet scheduling order.

Our subsequent work makes some progress towards achieving this objective, where we propose an efficient packet scheduling algorithm to strike a desirable balance between fairness and efficiency. Experimental results based on both real-world implementation and trace-driven simulation suggest that a slight fairness compromise can potentially improve the throughput to the point where the system capacity is almost saturated. Authored by Wei Wang, this work has been published in the Proceedings of ACM CoNEXT 2014.